Big Data Integration (Overcoming the Challenges)

Big data is collected from many different sources in many formats. How can you best integrate the data to process and analyze it?

What is big data integration? Big data integration refers to combining data from multiple separate business systems into a single unified view to process it and generate insights.

What Is Big Data Integration?

Data integration is a common practice in modern, data-driven enterprises. It incorporates data into critical infrastructure, decision-making, and operational considerations to inform best practices and optimization. This process can encompass nearly every aspect of a business, including supply chain, administration, and customer service.

Big data integration, then, is simply the inclusion of big data systems in integration strategies. Big data, in this context, isn’t merely a reference to large volumes of information. Instead, it refers to collecting and managing data streams across dozens or hundreds of potential inputs to drive advanced processes and analytics.

The move from traditional to big data integration is one in which complexity increases sharply. As such, there are critical aspects of the process that any enterprise should consider, including:

- Collect Data From Different Sources: Big data systems are predicated on the idea that an organization collects massive quantities of data across multiple, heterogeneous data sources.

- Store Data for Ready Access: It isn’t enough to just store collected data in cloud servers. This information must be readily available for quick retrieval whenever needed, ideally through high-availability systems.

- Automate Critical Processes: Data processing over big data architecture is, to put it bluntly, often beyond the capabilities of humans using manual processing tools. Automated systems are necessary to help organize, sanitize, and optimize data and data-gathering infrastructure.

- Orchestrate Access, Storage, and Processing: Orchestration mechanisms are a critical part of automation services. They control the overall integration process, from first collection through storage and processing.

- Provide Business and Scientific Intelligence: The end result of any integration project is its use in analytics and intelligence. In many cases, data integration serves organizations that rely on that information for massive-scale intelligence powering business operations or research goals. As such, the structure of those analytics is critical to any integration project’s success.
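To make the orchestration idea more concrete, here is a minimal sketch of an orchestrator that runs collection, storage, processing, and analytics stages in dependency order. The stage names, the shared context dictionary, and the sample records are all hypothetical; production orchestrators add scheduling, retries, and monitoring on top of this basic ordering step. It uses the standard library's graphlib (Python 3.9+) to compute a valid execution order.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical stages; each one reads from and writes to a shared context dict.
def collect(ctx: dict) -> None:
    # Stand-in for pulling records from several heterogeneous sources.
    ctx["raw"] = [{"source": "crm", "amount": "10"}, {"source": "erp", "amount": "32"}]

def store(ctx: dict) -> None:
    # Stand-in for persisting raw records so later stages can access them.
    ctx["stored"] = list(ctx["raw"])

def process(ctx: dict) -> None:
    # Sanitize: convert string amounts into integers.
    ctx["processed"] = [{**r, "amount": int(r["amount"])} for r in ctx["stored"]]

def analyze(ctx: dict) -> None:
    # A trivial "analytic": total amount across all sources.
    ctx["total"] = sum(r["amount"] for r in ctx["processed"])

STAGES: dict[str, Callable[[dict], None]] = {
    "collect": collect,
    "store": store,
    "process": process,
    "analyze": analyze,
}

# Each stage maps to the set of stages that must finish before it runs.
DEPENDENCIES: dict[str, set[str]] = {
    "collect": set(),
    "store": {"collect"},
    "process": {"store"},
    "analyze": {"process"},
}

def run_pipeline() -> tuple[list[str], dict]:
    """Run every stage once, in an order that respects DEPENDENCIES."""
    ctx: dict = {}
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    for name in order:
        STAGES[name](ctx)
    return order, ctx
```

Declaring dependencies rather than hard-coding a sequence means new sources or processing steps can be added without rewriting the runner itself.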

Tools for Big Data Integration

That all being said, big data integration calls for tools that can handle the unique needs of these endeavors.

Some of the major tools forming the backbone of a big data integration project include the following:

- Data Lakes: Data lakes are large pools of raw, often unstructured data. Lakes often serve as a stop-off point for incoming data before it is organized and sanitized, as they provide rapid access to information before it makes its way through the rest of your data system.

- Data Warehouses: The home for processed, structured data, warehouses are where your mission-critical applications will pull data from as part of their everyday operations.

- Master Data Management (MDM): Master data is the core reference data (such as customers, products, and suppliers) around which your organization structures meaning in its big data infrastructure. It defines how collected information fits into the business and operational side of your organization, providing a sort of dictionary for what information is and how it should be processed.

- Integration Platforms: Integration platforms span the processes and operations that link orchestration, data sources, and data storage into a single, comprehensive project. Such systems can tie directly to specific cloud architectures or fit into several types of hybrid architectures. Major technical providers like Microsoft, Oracle, and IBM offer integration tools, but open-source options also exist.
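The "dictionary" role of master data can be illustrated with a small sketch: a cross-reference table maps each source system's local ID to one master ID, and records from different systems are merged into a single unified view. The table contents, system names, and merge rule (the first value seen for a field wins) are hypothetical simplifications; real MDM platforms also handle fuzzy matching, survivorship rules, and data stewardship workflows.

```python
from collections import defaultdict

# Hypothetical master data cross-reference: (source system, local ID) -> master ID.
MASTER_XREF: dict[tuple[str, str], str] = {
    ("crm", "C-001"): "CUST-1",
    ("billing", "9001"): "CUST-1",
    ("crm", "C-002"): "CUST-2",
}

def unify(records: list[dict]) -> tuple[dict[str, dict], list[dict]]:
    """Group source records under their master ID and merge their attributes.

    Returns the unified view plus any records with no master data entry.
    """
    unified: dict[str, dict] = defaultdict(dict)
    unmatched: list[dict] = []
    for rec in records:
        master_id = MASTER_XREF.get((rec["system"], rec["local_id"]))
        if master_id is None:
            # No master entry: set aside for review instead of guessing.
            unmatched.append(rec)
            continue
        # The first value seen for a field wins; later records only fill gaps.
        for field, value in rec["attrs"].items():
            unified[master_id].setdefault(field, value)
    return dict(unified), unmatched
```

For example, a CRM record and a billing record that both map to CUST-1 collapse into one customer entry, while a record from an unregistered web system lands in the unmatched list.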

What Are Some Challenges of Big Data Integration?

As with any complex technical undertaking, data integration has its own challenges. A key part of all these challenges is ensuring that all the disparate components of the solution work well together and that the results gained from any processing accurately reflect the trends in that data.

More specifically, the challenges an organization might face include the following:

- Streamlining Data Ingestion: When gathering data across so many sources, coming in so many different formats and conditions, it’s critical that your integration system can cover those myriad formats and data streams efficiently.

- Managing High-Availability Storage: Data must remain available at all times, so it’s critical to run high-availability storage clusters. These storage requirements sit alongside the data structuring and access speeds that serve as the backbone of any solution.

- Providing Relevant Analytics: As of this writing, a machine cannot decide for an organization which analytics it needs to reach its ultimate goals. Determining those analytics, and continually updating them to match current and future needs, remains an ongoing challenge.
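One common way to streamline ingestion across many formats is a parser registry: each source format gets a small parser that normalizes its records into one shared schema, and the ingestion step dispatches on the format. The sketch below assumes a hypothetical sensor schema ("sensor_id" and "reading") and two formats, CSV and JSON Lines, using only Python's standard csv and json modules.

```python
import csv
import io
import json
from typing import Callable, Iterator

# Hypothetical common schema: {"sensor_id": str, "reading": float}.
Record = dict

def parse_csv(text: str) -> Iterator[Record]:
    # Assumes CSV sources use the column names "id" and "value".
    for row in csv.DictReader(io.StringIO(text)):
        yield {"sensor_id": row["id"], "reading": float(row["value"])}

def parse_jsonl(text: str) -> Iterator[Record]:
    # Assumes JSON Lines sources use the keys "sensor" and "reading".
    for line in text.splitlines():
        if line.strip():
            obj = json.loads(line)
            yield {"sensor_id": str(obj["sensor"]), "reading": float(obj["reading"])}

# Supporting a new source format means registering one more parser here.
PARSERS: dict[str, Callable[[str], Iterator[Record]]] = {
    "csv": parse_csv,
    "jsonl": parse_jsonl,
}

def ingest(batches: list[tuple[str, str]]) -> list[Record]:
    """Normalize (format, payload) batches into a single list of records."""
    records: list[Record] = []
    for fmt, payload in batches:
        parser = PARSERS.get(fmt)
        if parser is None:
            raise ValueError(f"no parser registered for format {fmt!r}")
        records.extend(parser(payload))
    return records
```

Failing loudly on an unknown format, rather than silently skipping it, keeps gaps in ingestion coverage visible.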

What Are Some Components of Big Data Integration Projects?

While several tools and technologies play a part in creating an extensive data integration solution, they aren’t enough on their own to make that solution successful. Because big data analytics touch almost every aspect of an organization, the organization must align each of those areas with its data infrastructure.

Some of the more important administrative and organizational components of a big data integration project include the following:

- Data Governance Policy: Data governance is quickly becoming a necessary part of running any data-driven organization. Governance policies help centralize the security, privacy, and orchestration concerns inherent in large-scale data management. When using an integration approach to analytics, it’s almost impossible to produce effective, accurate analytics without a governance policy.

- Data Security: When gathering terabytes of data from hundreds of sources, it’s almost impossible to secure that data ad hoc in line with regulations, industry compliance standards, and personal privacy concerns. Centralized security policies, orchestrated security measures, and scalable approaches to compliance are what make big data integration a realistic practice.

- Data Integrity: To use data from various sources effectively, that data must be in a form the system can use. Furthermore, to trust analytics from a big data system, the integrity of that data must be guaranteed from first touch through processing. As a result, a project will almost invariably include some form of integrity verification solution.

- Cloud Platforms: Underlying all of these approaches, strategies, and components is the cloud infrastructure that drives them. Hybrid, high-performance cloud systems are an unavoidable necessity for any big data project, including any integration approach.
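As one illustration of integrity verification "from first touch through processing," the sketch below attaches a SHA-256 checksum to each record at ingestion and recomputes it before processing. The envelope layout and function names are hypothetical. A plain checksum detects accidental corruption; guarding against deliberate tampering would call for a keyed scheme such as an HMAC (Python's hmac module) with a protected secret.

```python
import hashlib
import json

def fingerprint(record: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) so equal records hash equally.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def tag_on_ingest(record: dict) -> dict:
    """Wrap a copy of the record with the checksum computed at first touch."""
    return {"payload": dict(record), "sha256": fingerprint(record)}

def verify_before_processing(envelope: dict) -> bool:
    """Recompute the checksum and compare it with the one stored at ingestion."""
    return fingerprint(envelope["payload"]) == envelope["sha256"]
```

Canonicalizing the JSON first matters: two systems that serialize the same record with different key orders would otherwise produce different hashes and trigger false integrity failures.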

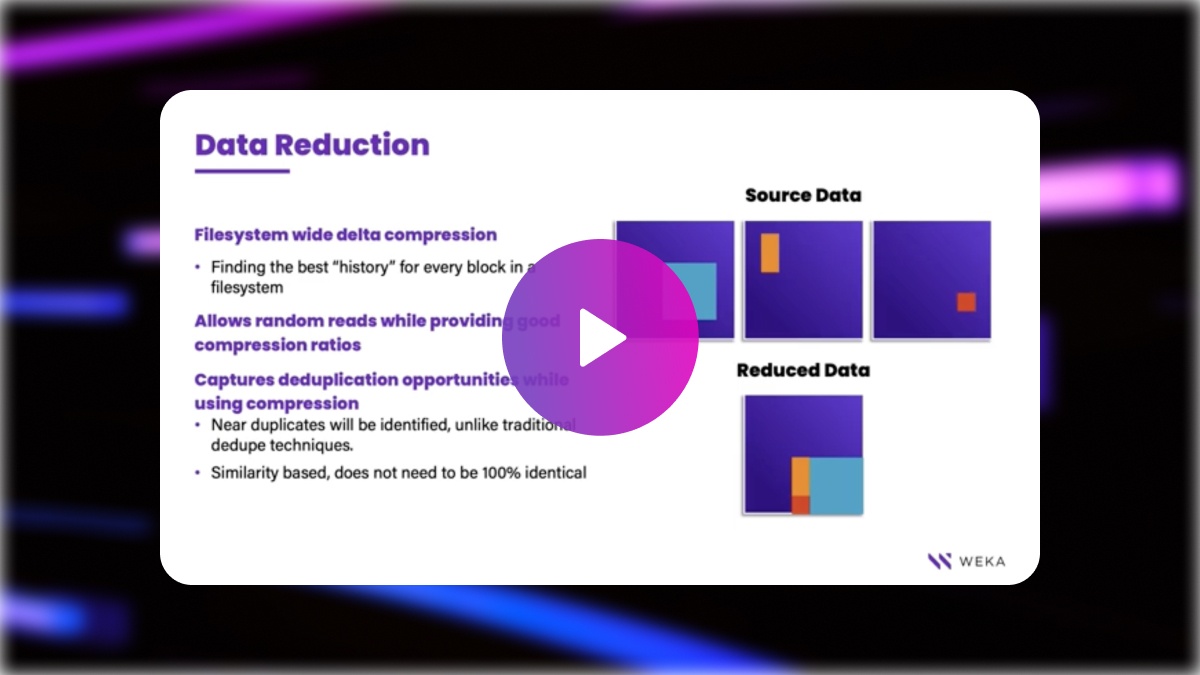

Power Big Data Integration in Your Enterprise with WEKA

Big data infrastructure calls for tailored software and hardware connecting data ingestion, orchestration, processing, and analytics. WEKA provides a high-performance cloud computing platform that can power big data projects with a combination of features, including:

- Streamlined and fast cloud file systems to combine multiple sources into a single high-performance computing system

- Industry-best GPUDirect performance (113 Gbps for a single DGX-2 and 162 Gbps for a single DGX A100)

- In-flight and at-rest encryption for governance, risk, and compliance requirements

- Agile access and management for edge, core, and cloud development

- Scalability up to exabytes of storage across billions of files

Contact us today to learn more about high-performance clouds for big data integration.