Solving the Infrastructure Puzzle for Artificial Intelligence

In its public resource, Insights on Artificial Intelligence, Gartner recently shared several notable strategic planning assumptions. One assumption about artificial intelligence (AI) stands out: “By 2025: AI will be the top category driving infrastructure decisions, due to the maturation of the AI market, resulting in a tenfold growth in computing requirements.”

It is no surprise that organizations are rapidly adopting GPU accelerators to meet the computational needs of AI, machine learning (ML), and deep learning. What is surprising is that supporting AI initiatives and workloads remains a significant challenge for many data infrastructure and operations teams. The hardest parts are delivering predictable performance, accelerating time to productivity and value, and keeping costs under control.

Enterprise IT teams have long relied on validated infrastructure reference designs, delivered through vendor collaborations, to reach their desired outcomes faster and at a lower cost than do-it-yourself approaches.

WEKA has been working with NVIDIA to help organizations achieve similar benefits with the new NVIDIA DGX BasePOD. This validated reference architecture provides an integrated AI infrastructure design built on NVIDIA DGX systems that incorporates various components and best practices for compute, networking, storage, power, cooling, and more.

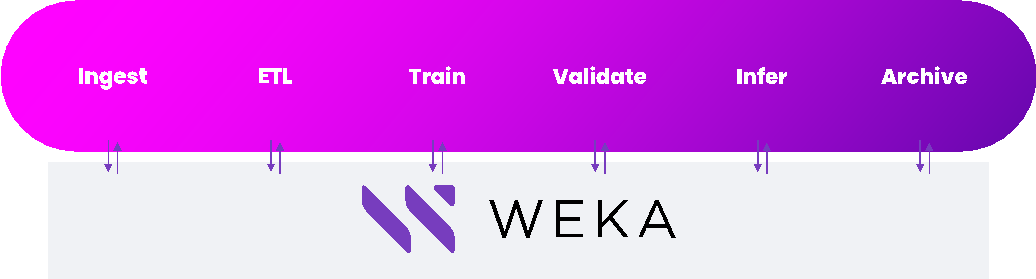

The WEKA Data Platform powered by NVIDIA DGX BasePOD offers a high-performance, scalable AI infrastructure solution accessible to organizations of any size. Underpinned by WEKA’s data platform software, this reference architecture delivers high-performance infrastructure that scales linearly for AI workflows.

That is why we are so excited about NVIDIA DGX BasePOD, which incorporates new elements such as NVIDIA Base Command and reaches new levels of scale thanks to greater architectural flexibility. WEKA is proud to support this evolution, and the WEKA Data Platform is certified as a high-performance storage technology for NVIDIA DGX BasePOD.

The WEKA Data Platform powered by NVIDIA DGX BasePOD will enable us to extend these benefits to our customers at a much larger scale. Key highlights of the new solution include:

- The WEKA Data Platform and NVIDIA DGX BasePOD are now directly applicable to mission-critical enterprise AI workflows, including natural language processing and larger-scale workloads for customers in the life sciences, healthcare, and financial services industries, among many others. WEKA serves both large and small files with ease across a wide range of workload types.

- Organizations can now support every step in their AI workflow without trading off simplicity, scale, or performance. Together, WEKA and NVIDIA DGX BasePOD deliver linear scaling with extremely high performance. Scaling capacity and performance is as simple as adding DGX systems, network connectivity, and WEKA nodes, with no need for complex sizing exercises or expensive services to grow.

- WEKA’s continued innovation and support for NVIDIA Magnum IO GPUDirect Storage technology provide low-latency, direct access between GPU memory and storage. This frees the CPU cycles that would otherwise be spent servicing I/O operations, delivering higher performance for other workloads.

We want to hear from you: Contact us for a technical deep dive on the WEKA Data Platform powered by NVIDIA DGX BasePOD and see firsthand how we can help to solve the infrastructure challenges holding your AI initiatives back.

Also, reach out to see if your team qualifies for a complimentary technical design workshop with WEKA and NVIDIA DGX BasePOD. The workshop can help you navigate design and operations best practices for storage and networking to maximize the value of NVIDIA GPUs and DGX systems. We will also touch on integration with MLOps providers to get more models into production, faster.

© 2022 WekaIO, Inc. All Rights Reserved. All product names, logos, brands, trademarks, and registered trademarks are property of their respective owners.