Hot Take: Real Customers, Real Benchmarks

Supercomputing ’23 is over, and with it comes one of the event’s more interesting outcomes: an updated IO500 list! This year, the IO500 broke new ground by adding a new category: the Production List. Why is this a big deal? Because the IO500 is now recognizing that high-performance computing (HPC) environments are increasingly moving into the enterprise space, where high expectations for reliability, availability, and serviceability are, frankly, table stakes. Kudos to the IO500 team for leading the way in introducing disclosure about how these HPC systems are deployed in production.

As I’ve written before, I’m not typically a fan of benchmarks with limited disclosure about how systems are built, and the IO500 was one of them. However, this appears to be changing. As part of the move to a production list, the IO500 now rates the reproducibility of each result. To get a top rating, you must disclose numerous details about the environment and the tuning needed to achieve the results so that anyone can duplicate the setup. The top rating is called ‘Fully Reproducible.’

Memorial Sloan Kettering Cancer Center, a WEKA customer, is among the preeminent cancer research organizations in the world. The center recently decided to take its IRIS research cluster and run the IO500 benchmark on it to see what result it could achieve.

And what a result it was! The storage side was a moderately sized WEKA cluster: 22 servers, each with eight 15TB NVMe drives, for a usable 2.1 PiB (2.35 petabytes) of capacity, plus dual 200Gb InfiniBand links, connected to 36 client nodes in the compute cluster with a mix of CPUs and GPUs. This configuration produced a top-5 score of 308.94, pushing over 104GiB/sec and 910kIOP/sec with the IO500 workload, all while maintaining a full reproducibility rating!

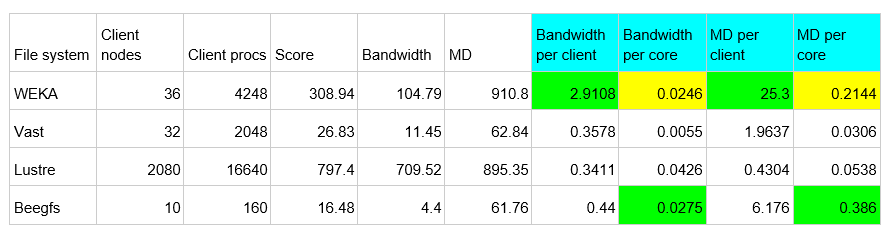
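For readers who want to sanity-check the headline number: the IO500 overall score is the geometric mean of the bandwidth score (in GiB/sec) and the metadata score (in kIOP/sec). The short sketch below plugs in the rounded figures quoted above. The variable names are mine, and because the post only gives rounded inputs ("over 104GiB/sec"), the result lands near the published 308.94, not exactly on it.

```python
import math

# Rounded figures quoted in this post; the official submission
# carries more decimal places, so expect a small gap.
bandwidth_gib_s = 104.0   # IO500 bandwidth score, GiB/sec
metadata_kiops = 910.0    # IO500 metadata score, kIOP/sec
published_score = 308.94  # overall score reported for this run

# The IO500 overall score is the geometric mean of the two sub-scores.
estimated_score = math.sqrt(bandwidth_gib_s * metadata_kiops)

print(f"estimated score: {estimated_score:.2f}")
print(f"published score: {published_score:.2f}")
print(f"gap from rounding: {published_score - estimated_score:.2f}")
```

The estimate comes out at roughly 307.6, within about half a percent of the published score, which is what you would expect from inputs rounded down.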

Big numbers are great to show, but the devil is in the details, and a closer look at the production list turns up some interesting data.

If we break down the overall scores and normalize them to account for the differing sizes of the systems, for both the client and storage environments, we see that WEKA either leads by a massive 4-25x, depending on the category, or runs a close second: less than 45% behind the leading score in one category and only 12% behind in another.
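To make the idea of normalizing concrete, here is a minimal sketch that divides the headline bandwidth and metadata numbers by the client and storage node counts. It only uses the Memorial Sloan Kettering figures quoted in this post. The `Submission` class and `normalize` function are illustrative names of my own, and per-node division is an assumption about one reasonable way to normalize, not necessarily the exact method behind the comparison above (a per-core breakdown would need core counts that this post doesn't list).

```python
from dataclasses import dataclass


@dataclass
class Submission:
    """Headline figures for one IO500 entry (hypothetical helper type)."""
    name: str
    bandwidth_gib_s: float   # GiB/sec
    metadata_kiops: float    # kIOP/sec
    client_nodes: int
    storage_servers: int


def normalize(sub: Submission) -> dict[str, float]:
    """Divide each headline figure by the client and storage node counts."""
    return {
        "gib_s_per_client": sub.bandwidth_gib_s / sub.client_nodes,
        "kiops_per_client": sub.metadata_kiops / sub.client_nodes,
        "gib_s_per_server": sub.bandwidth_gib_s / sub.storage_servers,
        "kiops_per_server": sub.metadata_kiops / sub.storage_servers,
    }


# Rounded figures from this post: 36 client nodes, 22 storage servers.
msk = Submission("MSK IRIS (WEKA)", 104.0, 910.0, 36, 22)
per_unit = normalize(msk)

for metric, value in per_unit.items():
    print(f"{metric}: {value:.2f}")
```

Running the same function over other production-list entries, using the node counts each submission discloses, gives per-node figures that can be compared across systems of very different sizes.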

The results highlight two key things. First, the flexibility of WEKA in driving performance across a wide range of IO patterns: we’re not tuned for one specific workload. Second, the exceptional efficiency with which WEKA uses the resources in the environment. Being able to drive very high performance per core on each client means you won’t have expensive GPUs idling while they wait for IO. With GPUs costing tens of thousands of dollars per card, that idle time is a cost you have to account for.

Congratulations again to Memorial Sloan Kettering on this impressive result. We – and everyone else on the planet – hope this system helps to accelerate your cancer research.

Want to know more? Ping me at joel@weka.io or on LinkedIn, or contact info@weka.io for more information.