Building Intelligence That Sees and Creates

Generative AI company Luma AI achieves major efficiency gains with NeuralMesh™ by WEKA®, reaching up to 40x faster training startup times along with easier scaling and improved reliability.

How Luma AI Accelerates Every Experiment with WEKA

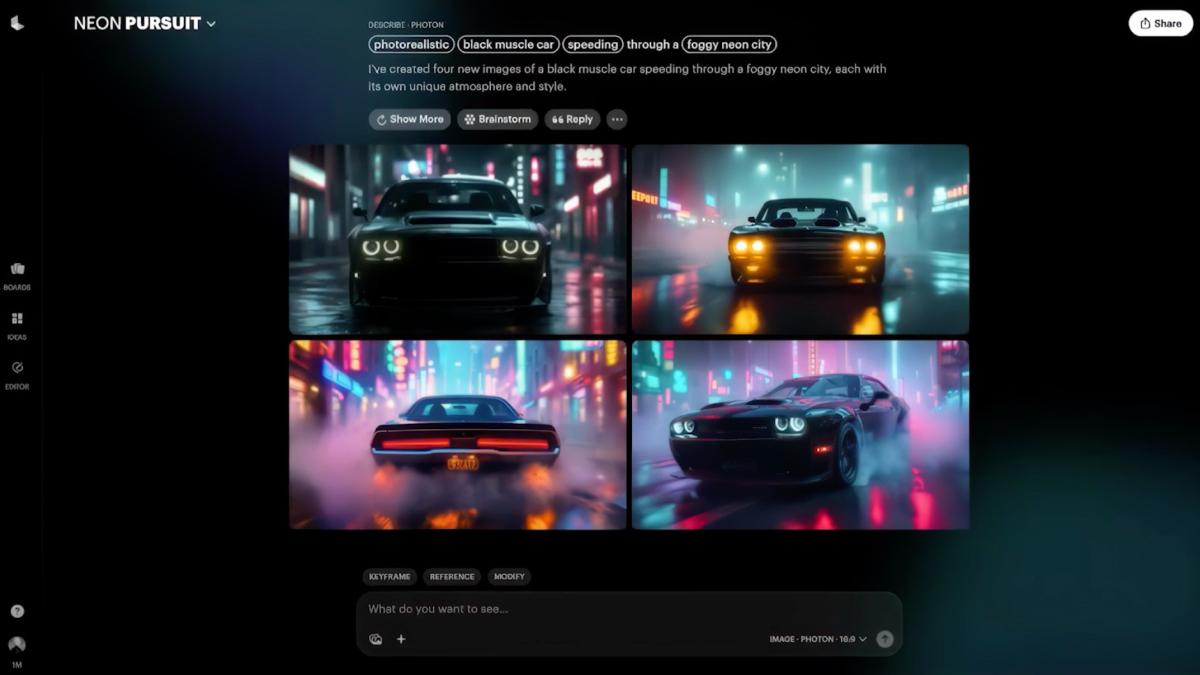

At Luma AI, the mission is bold: build multimodal AI to expand human imagination and capabilities. The company is training world models that jointly learn from video, audio, and language — similar to how the human brain learns — to enable AI systems that can see, hear, and reason about the world.

With flagship products like Dream Machine, Luma AI has enabled millions of creators to generate cinematic-quality videos from simple text prompts. Behind these breakthrough products is a team of world-class researchers and engineers who have invented foundational technologies including DDIM, the VAE Bottleneck, Neural Radiance Fields, and advanced neural compression.

Thomas Neff, Head of Systems Research & Engineering at Luma AI, leads efforts that enable the company to scale both training and inference operations. His work focuses on making sure the infrastructure can support the team’s ambitious goal of building powerful and collaborative products for creatives.

“What excites me most is working with the team to scale up our training runs. In the last 12 months alone, we’ve made significant strides and enabled capabilities that weren’t possible before.”

The Challenge

Building Tomorrow’s AI on Yesterday’s Systems

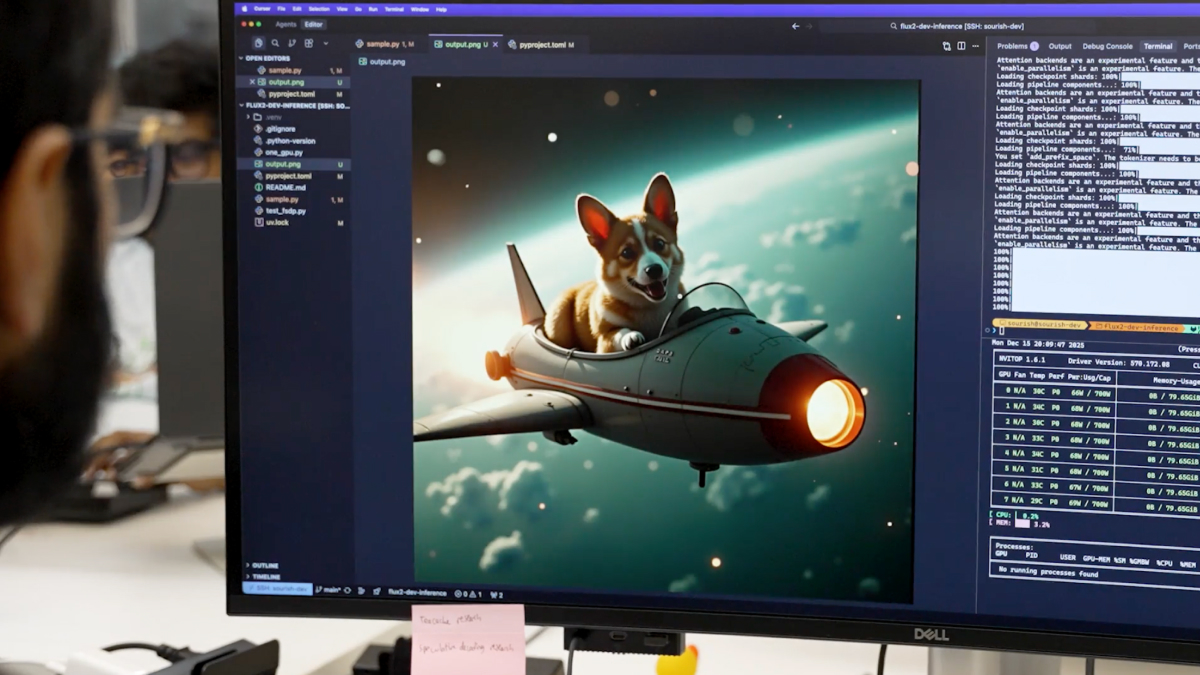

As Luma AI’s research progressed, their infrastructure struggled to keep up. The company operates hundreds of NVIDIA H100 nodes for training across multiple clusters, processing petascale datasets. But as experimentation accelerated, critical bottlenecks emerged.

The multi-modality challenge was fundamental. “For natively multi-modal models, there’s pretty much nothing out there,” Neff noted. “We need to build a custom stack for both training and inference that supports these capabilities.”

While language models and pure video models have abundant infrastructure support, Luma AI’s pioneering work combining video, audio, and language required building everything from scratch.

Additionally, performance bottlenecks plagued their training infrastructure. Amazon FSx for Lustre, which had initially provided adequate IOPS, was now topping out during large-scale training jobs. Environment loading caused long delays at the start of training runs, leaving Luma AI’s many H100 nodes idle and consuming power and costly GPU hours while they waited.

Further, managing Python environments across hundreds of nodes had become troublesome. “Everyone tries to do something slightly differently. They need to change one small thing in their environment, then run a script that pushes it to all the nodes—which took 20 minutes,” Neff recalls. “Beyond being frustrating, it was extremely difficult to support. People contacted our experts daily because their environments weren’t working. It was painful.”

The impact was clear: “If it takes a researcher half a day to debug an environment setup issue, that delays a model training run or experiment by a day or more. These issues can directly impact shipping deadlines and product releases.”

The team needed infrastructure built for the demands they were placing on it.

The Solution

NeuralMesh Provides Performance Without Compromise

The team at Luma AI knew they needed a different approach: storage that could handle thousands of processes simultaneously and that would make environment management simple. That’s when they began evaluating NeuralMesh.

The team ran a proof of concept, and the results spoke for themselves. The pain points they’d been living with—the environment distribution headaches, the IOPS limitations, the support burden—began to evaporate.

“Once we started the POC with WEKA, we saw that it resolved the issues that we had. Back then, there were not a lot of people to manage or even maintain what we had. WEKA freed us up so we could work on more important things.”

NeuralMesh provided what Luma AI had been looking for: an adaptive, high-performance storage system accessible from every node, enabling seamless sharing of Python environments, code, and experimental data while maintaining the performance needed for intensive training workloads.

“We maintained the convenience of sharing environments on distributed storage without constantly moving files to the local disks of every node,” Neff notes. The system handled massive throughput without breaking a sweat—thousands of processes simultaneously pulling data without performance degradation.

The implementation was straightforward, with strong support from the WEKA team throughout. The solution integrated smoothly with Luma AI’s existing infrastructure, working alongside their S3-based data pipelines for training data. “We had an excellent experience getting it set up and resolving our previous concerns,” Neff recalls.

The Impact

Liberating Research from Infrastructure Limits

NeuralMesh transformed both the numbers and the daily experience of Luma AI’s researchers.

The performance gains were dramatic. Environment loading times dropped from 10-20 minutes to 30-60 seconds, up to 40 times faster. Training runs that used to take 20 minutes to launch now start in a minute or less. H100 GPU nodes that frequently sat idle while environments loaded now reach 95-100% utilization much sooner, with each GPU consistently drawing 650-700 watts during runs, delivering maximum computational efficiency and return on investment.

NeuralMesh resolved the rate-limit issues that had previously plagued Luma AI’s large-scale training jobs. The team can now run more experiments in parallel and iterate faster on model development, working seamlessly across their datasets.

“We can take advantage of all the scaling laws by launching larger deployments without having to worry about startup times,” Neff noted.

The business impact extended beyond raw performance. Researcher productivity improved dramatically. New team members become productive more quickly with access to shared environments: no complex onboarding, no waiting for environment configuration.

“When our research moves faster, we can iterate on our products faster, which directly impacts what we ship.”

Core Benefits of NeuralMesh

Efficiency

Thousands of processes can hammer the system without performance degradation.

Convenience

Multiple people can access and share environments simultaneously without conflicts.

Reliability

The system operates with minimal issues.

“I don’t think we’ve had many or any issues with it at all,” Neff says. NeuralMesh can be used like any other file system but delivers the speed of high-performance network storage.

The transformation freed the systems team from constant firefighting so they could focus on innovation. Beyond these direct improvements, Luma AI anticipates indirect cost savings through more efficient compute utilization, reduced downtime, and eliminated idle GPU time.

Looking Forward

The Next Frontier of Creative Intelligence

For Luma AI, the partnership with WEKA is about building the foundation for the future of worldbuilding and multimodal intelligence. As they continue pioneering AI systems that enable creative expression for millions worldwide, infrastructure purpose-built for petabyte-scale workloads becomes essential—not just for storing data, but for unlocking the breakthroughs that expand human imagination.

“Working with NeuralMesh helps me and my team run experiments faster,” Neff concludes. “If I could go back and tell my past self one thing about choosing NeuralMesh, it would be this: it’s faster than you think.”