How GPUDirect Storage Accelerates Big Data Analytics

Performance of GPU Accelerated AI

GPU-accelerated computing has traditionally been associated with high performance computing (HPC) and, more recently, with AI and machine learning. However, the RAPIDS suite of software libraries now enables end-to-end data science and analytics pipelines to run entirely on GPUs.

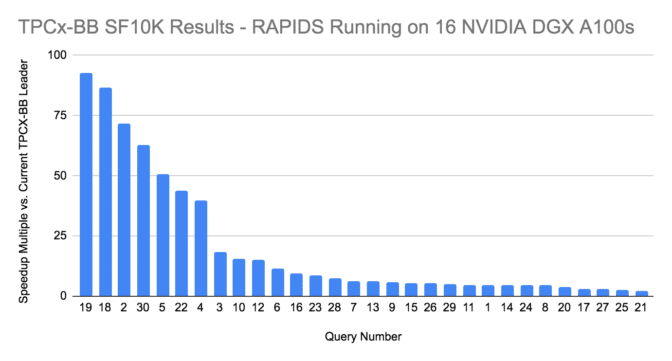

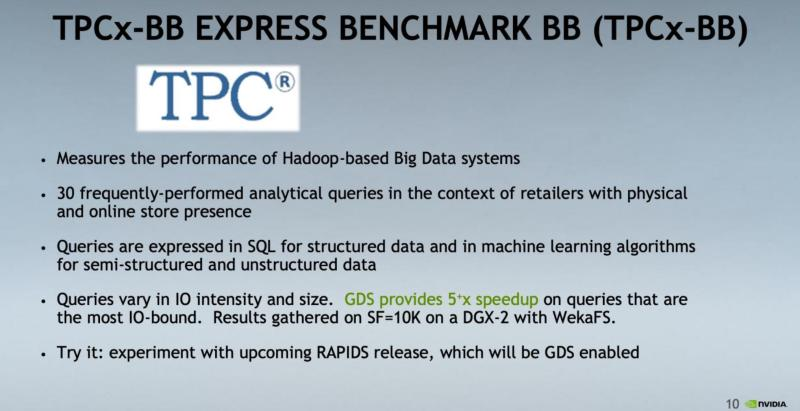

The performance impact of GPU-accelerated computing and RAPIDS was recently illustrated by NVIDIA’s unofficial TPCx-BB result, which beat the previous record by 19.5x. TPCx-BB is a big data benchmark for enterprises that represents real-world ETL (extract, transform, load) and machine learning workflows. The benchmark’s 30 queries cover big data analytics use cases such as inventory management, price analysis, sales analysis, recommendation systems, customer segmentation and sentiment analysis.

GPU-accelerated compute nodes have more than 10x the compute density of CPU-based systems. Keeping those GPUs fed with data at high throughput and low latency is therefore a major challenge for traditional data storage systems. To address it, NVIDIA has introduced GPUDirect Storage, a feature that bypasses the CPU and system memory so that GPUs can communicate directly with internal or shared data storage systems.
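To make the bottleneck concrete, the following is a minimal back-of-the-envelope sketch, not a measurement. It assumes reads and compute do not overlap, and every number in it (job size, compute times, storage bandwidth) is hypothetical and chosen only for illustration. The function names are likewise made up for this example.

```python
def gpu_utilization(compute_seconds: float,
                    bytes_to_read: float,
                    storage_bytes_per_second: float) -> float:
    """Fraction of wall-clock time spent computing, assuming the
    processor waits for all reads (no overlap of IO and compute)."""
    io_seconds = bytes_to_read / storage_bytes_per_second
    return compute_seconds / (compute_seconds + io_seconds)


def required_bandwidth(compute_seconds: float,
                       bytes_to_read: float,
                       target_utilization: float) -> float:
    """Storage bandwidth (bytes/s) needed to reach a target utilization
    under the same no-overlap assumption."""
    # Time budget left for IO once the target utilization is fixed.
    io_budget = compute_seconds * (1.0 - target_utilization) / target_utilization
    return bytes_to_read / io_budget


if __name__ == "__main__":
    # Hypothetical job: 100 GB of input, storage delivering 2 GB/s.
    data_bytes = 100e9
    storage_bw = 2e9

    cpu_compute = 500.0             # hypothetical CPU compute time (s)
    gpu_compute = cpu_compute / 10  # 10x denser compute, per the text

    cpu_util = gpu_utilization(cpu_compute, data_bytes, storage_bw)
    gpu_util = gpu_utilization(gpu_compute, data_bytes, storage_bw)
    print(f"CPU node busy fraction: {cpu_util:.2f}")
    print(f"GPU node busy fraction: {gpu_util:.2f}")

    # Bandwidth the GPU node would need to be as busy as the CPU node was.
    needed = required_bandwidth(gpu_compute, data_bytes, cpu_util)
    print(f"Storage bandwidth needed: {needed / 1e9:.1f} GB/s")
```

Under these assumed numbers, making compute 10x faster without changing storage leaves the GPU waiting on data for half of the run, and the storage system has to deliver 10x the bandwidth to restore the original busy fraction. Real pipelines overlap IO with compute, so this is only a rough model of why the data path matters.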

NVIDIA is an investor in WEKA. Today, WEKA is one of only a handful of storage technologies that support GPUDirect Storage. WEKA is also distinctive in handling small files, random IO and metadata-intensive workloads as easily as it handles large files and sequential IO workloads.

GPUDirect Storage Results

In its recent GPUDirect Storage webinar, NVIDIA chose to illustrate further performance improvements on the TPCx-BB benchmark (separate from the previously referenced results) through the use of GPUDirect Storage and WEKA. The image below is taken from that webinar and highlights that, with WEKA, GPUDirect Storage provides a further 5x speedup on the TPCx-BB results that are most IO-bound.

These results show that the data storage solution should be considered carefully when transitioning to GPU-accelerated computing. Big data analytics can hit the turbo button with GPU acceleration. But just as a car’s turbo forces more air into the combustion chamber, the right data storage solution can feed more data into the GPUs. WEKA is GPUDirect Storage ready, container-enabled and built for modern analytics, with scale-out performance and scale-out capacity on industry-standard hardware.

WEKA is seeking beta customers for NVIDIA GPUDirect Storage. If you would like to be considered, you can register your interest here.

Additional Helpful Resources

Microsoft Research Customer Use Case: WEKA™ and NVIDIA® GPUDirect® Storage Results with NVIDIA DGX-2™ Servers

GPU for AI

How to Rethink Storage Architecture for AI Workloads

Achieving Groundbreaking Productivity with GPUs