WEKAIO AND HITACHI VANTARA PARTNER TO SOLVE THE CHALLENGES OF HPC STORAGE, DATA ANALYTICS AND AI/ML WORKLOADS

We are pleased to announce that WekaIO™ (Weka) has entered into an OEM agreement with Hitachi Vantara for WekaFS, Weka’s industry-leading scalable file and HPC storage software for data-intensive applications. The partnership, combined with new Hitachi Content Platform (HCP) capabilities, will help customers gain faster access to, and insights from, their rapidly growing unstructured data.

The partnership was driven by feedback from our customers and partners about new workloads that are held back by limited access to their most strategic assets, their datasets, and about the need for new data architectures to meet these requirements. Data has become the new currency, and how well businesses derive actionable intelligence, gain first-mover advantage, and operationalize and govern their data will determine their competitive differentiation and success in the digital economy. We are seeing this across many use cases, including autonomous vehicles, precision medicine, drug discovery using Cryo-EM, financial services (FSI), risk analytics, and fraud detection, to name a few.

New Workloads Driving New Architectures

The convergence of workloads across the traditional high-performance computing (HPC), high-performance data analytics (HPDA), and AI/ML markets is forcing new requirements on traditional storage stacks. Businesses are quickly learning that they need to architect, deploy, and manage storage services differently.

AI and GPU computing have penetrated traditional HPC and HPDA applications. Use cases are expanding from computer vision to conversational AI, natural language processing and natural language understanding (NLP/NLU), and multi-modal applications. Recommendation engines at the Internet giants now use deep learning, and low-latency inference is used for personalization (LinkedIn), speech (Nuance / Cerence), translation (Google), and video (YouTube). Similarly, deep neural networks (DNNs) are becoming more complex: models such as BERT and Megatron have hundreds of millions to billions of parameters, driving massive parallelism and several petaflops of compute. Equally important is the ability to deliver performance at scale for use cases such as autonomous vehicles. A single survey car working toward SAE Level 2+ autonomy easily generates 2 PB of data per year.
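To put the 2 PB per year figure in perspective, here is a rough back-of-the-envelope sketch that converts it into an average sustained ingest rate. It assumes decimal petabytes (10^15 bytes), a 365-day year, and data arriving evenly over the year; real capture and upload are bursty, so peak rates will be higher. The function name and the 100-car fleet are hypothetical, chosen only for illustration.

```python
PB = 10**15                         # decimal petabyte in bytes (assumption)
SECONDS_PER_YEAR = 365 * 24 * 3600  # 365-day year (assumption)


def average_ingest_mb_per_s(petabytes_per_year: float, cars: int = 1) -> float:
    """Average ingest rate in MB/s, assuming data arrives evenly over the year."""
    total_bytes = petabytes_per_year * PB * cars
    return total_bytes / SECONDS_PER_YEAR / 10**6


if __name__ == "__main__":
    # One survey car at 2 PB per year, the figure quoted above.
    print(f"{average_ingest_mb_per_s(2):.1f} MB/s for one car")
    # Hypothetical fleet size, used only to show how the rate scales linearly.
    fleet_gb_per_s = average_ingest_mb_per_s(2, cars=100) / 1000
    print(f"{fleet_gb_per_s:.2f} GB/s for a hypothetical 100-car fleet")
```

Under these assumptions a single car averages roughly 63 MB/s, and the requirement grows linearly with fleet size, which is why shared storage that scales out matters for this kind of workload.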

Data analytics is moving from predictive (what-if) to prescriptive (what action) to cognitive (leveraging AI), with Hadoop, Spark, and SQL query engines accelerated by a single source of truth in the form of data hubs. Similar transitions are seen in life sciences with precision medicine and next-gen sequencing use cases, as well as Cryo-EM pipelines, which have mixed workload requirements at scale. Hedge funds, brokerage firms, and trading houses are increasingly using real-time and historical tick datasets to build models for backtesting, risk analytics, and predictive analytics.

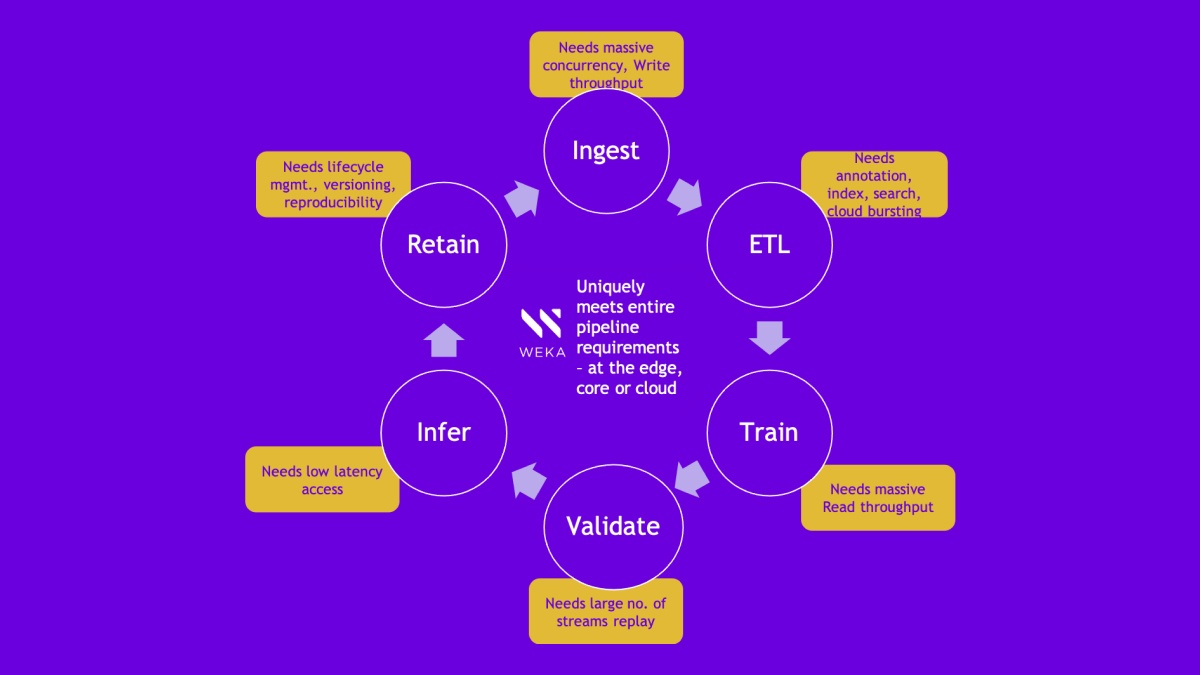

All of these transitions result in different data pipeline stages that have distinct data (and hence storage I/O) requirements for massive ingest and training bandwidth, mixed read/write handling, and ultra-low latency, resulting in the need for massively parallel storage access. Equally important is the ability to provide data versioning and immutable backup copies.

HPC storage architectures built on traditional direct-attached NVMe storage (DAS) or network-attached storage (NAS) limit performance and data mobility. In addition, NVMe block storage solutions (SAN) lack the shareability and parallelism needed to deliver timely insights at scale for these new workloads and architectures. This means that business and IT leaders must reconsider how they architect their storage stacks and make purchasing decisions.

Digital Transformation with Accelerated DataOps – It’s all about your data!

Data has become the most important strategic asset for digital businesses: it underpins new business models, faster time to market, and competitive differentiation. Accelerated DataOps, data management for the AI era, determines how well businesses can derive actionable intelligence, operationalize pipelines, and provide governance and trust, and ultimately how well they succeed in the digital economy.

Marriage Made in Heaven

The OEM agreement brings together two category leaders: Weka, for high-performance distributed file systems, and Hitachi Vantara, for best-of-breed storage architectures for data analytics. It will enhance Hitachi Vantara’s portfolio with a high-performance, NVMe-native, parallel file system that the company will deliver tightly coupled to Hitachi Content Platform (HCP) object storage as a single engineered solution, tuned to meet the massive parallelism, performance, and capacity demands of the mixed workloads common in the financial services industry (FSI), artificial intelligence (AI), life sciences, and high-performance computing (HPC). This network-attached storage (NAS) solution, which combines the performance of the Weka File System with the scalability of Hitachi, will reduce the high storage management overhead of running AI and analytics workloads, increase business agility, and improve time to market across a broad array of industries.

Please stay tuned for more information on the joint solutions born out of this relationship. We are confident that the combined HPC storage solution will meet the stringent technology and economics requirements laid out by our customers and partners during these unprecedented times.

Additional resources: