WekaIO Software-Defined Storage Powered by NVIDIA BlueField

Hardware accelerators have been used in the computing industry for many years, and there are many examples. Field Programmable Gate Arrays (FPGAs) perform high rates of specific operations and are very common in the financial industry. Graphics Processing Units (GPUs) perform massive amounts of floating-point computation; they are most commonly used in AI and ML, but also in other environments that benefit from their huge number of cores. Tensor Processing Units (TPUs) are highly efficient at matrix multiplication and are therefore used in neural network training. Application-specific integrated circuits (ASICs) and other devices round out the list.

These accelerators take over specific operations that the CPU offloads to them, freeing CPU cycles that can then be spent on the applications running on the compute server. In addition, the accelerator is better suited to those specific operations than a general-purpose CPU, which makes the data center as a whole more efficient.

Offloading the Server CPU with NVIDIA BlueField

NVIDIA BlueField technology adds further benefits. The two advantages described above still apply: some workloads can be offloaded to the BlueField DPU's networking accelerators and Arm processors, relieving the server's CPU, and those workloads run on hardware better suited to them. Because BlueField sits on the NIC, it is also well placed to accelerate the network layer (TCP, RDMA) and network encryption (IPsec, TLS), and to provide strong security isolation. BlueField also offers the option to offload all software tasks from the server's CPU, which can remove the need for separate server CPUs and reduce cost and power requirements. A third advantage is a predictable runtime environment: storage, networking, and security software run on the same BlueField DPUs regardless of the server platform. For example, the BlueField Linux OS is the same whether the BlueField is deployed in a Linux or a Windows server. This provides consistent functionality across platforms and saves the platform-specific engineering effort that would otherwise be required to adapt the software to each server platform.

Weka Data Platform Support for NVIDIA BlueField

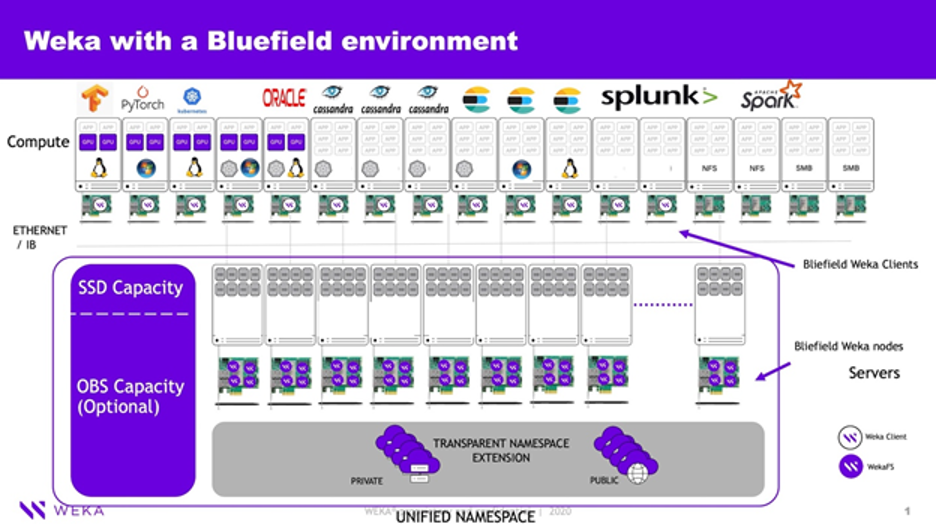

Because Weka's filesystem is fully software-defined, the Weka Data Platform can run on many server hardware types as well as on cloud instances. There are only two requirements: servers with NVMe SSDs, and high-speed networking to connect them (such as NVIDIA ConnectX Ethernet or InfiniBand adapters). Beyond that, the design stays simple: there is no need for RAID controllers, JBODs, JBOFs, SCM, or even UPSes. Weka performs all of these functions as an integrated part of its data path, which is more efficient than relying on external hardware components that increase TCO and overall solution complexity. This design also lets the Weka Data Platform adopt new technologies easily as they are released, and in most cases even before their release (e.g. https://www.weka.io/wp-content/uploads/files/2021/03/Supermicro-Servers-Excel-as-WekaIO-Ref-Architecture-v4.pdf). These include new CPU models, new NVMe SSDs, faster interconnects (e.g. PCIe Gen 4), and new network adapters such as the NVIDIA BlueField DPU product line.

The Benefits of WekaFS and NVIDIA BlueField

The following use cases are being explored and tested with the Weka Data Platform and BlueField. These joint solutions could change how we design and use storage while improving both TCO and performance:

– Release more CPU cycles to the compute server – Each server spends CPU cycles on I/O and networking, and those cycles are not available to the applications. Offloading the storage-side work to the BlueField Arm cores returns those cycles to the applications; in some cases, up to 20% of the CPU cores are handed back. This is an immediate performance and efficiency gain: imagine a data center with tens or hundreds of compute servers suddenly getting up to 20% of their CPU cores back for applications.

– Easily support multiple hardware and operating system configurations – The BlueField DPU can run a different operating system from the server it is connected to. This allows the same or a similar Weka client component to run across many hardware configurations and operating systems without developing and supporting each operating system and hardware environment separately, and with less exposure to each configuration's versioning and patching requirements.

– Decrease cost and simplify the solution – The BlueField DPU makes it possible to disaggregate Weka storage components and, in some configurations, to eliminate traditional CPUs entirely. For example, JBOFs with BlueField DPUs do not need x86 CPUs when the Weka storage node software runs only on the DPUs.

– Increase performance – Because the BlueField DPU supports DPDK and SPDK, some networking and storage data-path functionality can be offloaded entirely to the DPU hardware, improving performance.

– Develop once – With the NVIDIA DOCA development environment, solutions developed on the current BlueField DPU are future-proofed and can run on future generations of the BlueField product line.

– Offload additional functions to the BlueField hardware – Functions such as storage encryption, network encryption, data decompression, telemetry, and security monitoring can also be moved from the CPU to the BlueField DPU.
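To put the "up to 20%" CPU-offload figure from the first use case in perspective, here is a back-of-the-envelope sketch in Python. The fleet sizes (10 and 100 servers), the 64 cores per server, and the function names are hypothetical; the 20% fraction is the upper bound quoted above, not a measured result. One detail the arithmetic makes visible: if storage and networking consume 20% of a server's cores, applications previously had only 80% of them, so getting all cores back is a 25% increase in application-visible capacity.

```python
# Back-of-the-envelope CPU offload arithmetic (hypothetical inputs).
# ASSUMPTION: offload_fraction is the share of each server's cores consumed
# by storage/networking work that moves to the DPU; 0.20 is the "up to 20%"
# upper bound from the text, not a benchmark result.


def cores_returned(servers: int, cores_per_server: int, offload_fraction: float) -> int:
    """Total cores handed back to applications across a fleet."""
    if not 0.0 <= offload_fraction < 1.0:
        raise ValueError("offload_fraction must be in [0, 1)")
    return round(servers * cores_per_server * offload_fraction)


def app_capacity_gain(offload_fraction: float) -> float:
    """Relative increase in cores available to applications.

    Before offload, applications get (1 - f) of the cores; after offload,
    they get all of them, so the gain is 1 / (1 - f) - 1.
    """
    if not 0.0 <= offload_fraction < 1.0:
        raise ValueError("offload_fraction must be in [0, 1)")
    return 1.0 / (1.0 - offload_fraction) - 1.0


if __name__ == "__main__":
    for servers in (10, 100):  # hypothetical fleet sizes
        freed = cores_returned(servers, cores_per_server=64, offload_fraction=0.20)
        print(f"{servers} servers x 64 cores: {freed} cores returned to applications")
    print(f"Application-visible capacity gain: {app_capacity_gain(0.20):.0%}")
```

With these inputs, a 100-server fleet would get 1,280 cores back. The actual fraction depends on the workload and configuration, so treat the numbers as illustrative only.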

In today’s world of widespread hybrid cloud deployments and increasing use of AI technology, high-performance distributed storage is needed by both enterprises and service providers. WekaIO and NVIDIA are integrating scale-out storage software with the BlueField DPU to deliver highly scalable, software-defined storage solutions with increased performance, efficiency and security to help customers build the next generation of data centers.